GROMACS 4.6 example: Ethanol solvation with expanded ensemble

| Free Energy Fundamentals |

|---|

|

|

Methods of Free Energy Simulations

|

| Free Energy How-to's |

|---|

|

Setting up the calculation with Gromacs

This tutorial assumes knowledge of [Gromacs]. Make sure you actually know how to use Gromacs first.

Setting up the calculations are very similar to standard free energy calculations; you can review a tutorial here for the standard way to perform the simulation. The topology and gro files are exactly the same; only the mdp files and the calls to the programs change.

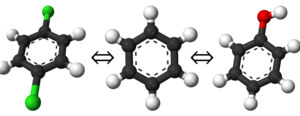

In this particular case, we again set up nine intermediate states defined to perform the calculation of the absolute solvation free energy of ethanol (also known as the chemical potential at infinite dilution). However, you will only need to run one simulations, as the simulation will go back and forth between the different states.

So, start with the same (ethanol.gro) and (ethanol.top) and a new mdp file (expanded.mdp) files.

Running the calculation with Gromacs

Run grompp and mdrun as normal. Specifically:

grompp -f expanded.mdp -c ethanol.gro -p ethanol.top -o ethanol.X.tpr -maxwarn 4

There may be some warnings, and you'll need to override, hence -maxwarn.

For mdrun, we simply run:

mdrun -deffnm ethanol.X -dhdl ethanol.X.dhdl.xvg

The number of threads can be set as with any other simulation. If you don't set the dhdl file independently, it will be saved to ethanol.X.xvg,

Strictly, you will only need the dhdl files, but looking at the other output files can be useful to determine what is going on in the system if something goes wrong.

Analyze the calculation with Gromacs

After you have generated the dhdl files, you can then analyze them using pymbar with the dhdl output.

You will need to install [[1]]. alchemical-gromacs.py will be in examples directory.

The correct invocation is:

python alchemical-gromacs.py -f directory/prefix -t 300 -p 1 -n 2500 -v > outpufile

'-t' is temperature, '-p' is pressure, '-f' is (prefix of the files, including directory), and '-v' is verbose output (not required, but helpful to understand!)

It will use all files it finds with the given prefix, and it will assume they are numbered in order. In this case, there should only be one file. If there are multiple expanded ensemble files, it will analyze all the data together. We omit the first 500 ps (0.2 ps per sample) for equilibration (likely overkill for this problem, but better safe than sorry).

You may see the following warnings:

Warning on use of the timeseries module: If the inherent timescales of the system are long compared to those being analyzed, this statistical inefficiency may be an underestimate. The estimate presumes the use of many statistically independent samples Tests should be performed to assess whether this condition is satisfied. Be cautious in the interpretation of the data

This is a standard warning -- the correlation time code will be an underestimate if there are correlation times that are long with respect to the simulation time. In this case, there aren't. With proteins, there probably are.

/Users/mrshirts/work/papers/MBARFORDUMMIES/pymbar/trunk/pymbar/pymbar.py:308: RuntimeWarning: overflow encountered in exp

log_f_R = - max_arg_R - numpy.log( numpy.exp(-max_arg_R) + numpy.exp(exp_arg_R - max_arg_R) ) - w_R

/Users/mrshirts/work/papers/MBARFORDUMMIES/pymbar/trunk/pymbar/pymbar.py:494: RuntimeWarning: overflow encountered in exp

fR = 1/(1+numpy.exp(w_R - C))

These warnings can pop up because we are using BAR initialization in the algorithm, which is a bit faster (not much). It will default to zeros, which is fine. As long as the MBAR calculation converges OK, these warnings can be ignored.

Understanding the analysis

Let's look at the output file:

The number of files read in for processing is: 1 output is verbose Reading metadata from solvation_direct/outputs/dhdls/ethanol_expanded.dhdl.xvg... Done reading metadata from solvation_direct/outputs/dhdls/ethanol_expanded.dhdl.xvg... Reading solvation_direct/outputs/dhdls/ethanol_expanded.dhdl.xvg...

All standard stuff saying what it's doing. The next part is important. pymbar determines how many of the samples are statistically uncorrelated, and only uses every [math]\displaystyle{ 1/\tau }[/math] samples.

Now computing correlation times Correlation times: [ 1.13024145 1.53302243 1.43612724 1.08803448 1.55534344 1.37338905 1.40559608 1.08837468 1.083193 ]

number of uncorrelated samples: [24331 17939 19149 25275 17681 20024 19565 25267 25388]

The next part is initalization information. We use the (faster, ignores information Bennett's acceptance ratio to get a quick estimate of the free energies between states, and refine it with multistate Bennett's acceptance ratio. For simple calculations, probably overkill.

relative_change = 1.000 iteration 0 : DeltaF = 4.609 relative_change = 0.000 iteration 1 : DeltaF = 4.609 relative_change = 0.000 Convergence achieved. Converged to tolerance of 8.439389e-13 in 2 iterations (5 function evaluations) DeltaF = 4.609 +- 0.007

Goes on like that for a while. Then we actually start the MBAR calculation. Some diagnostic information at the beginning.

Computing free energy differences... Using embedded C++ helper code. K = 9, L = 9, N_max = 27500, total samples = 194619 There are 9 states with samples. N_k = [24331 17939 19149 25275 17681 20024 19565 25267 25388] Initial dimensionless free energies with method BAR

This is starting iteration. These f_k's are free energies times [math]\displaystyle{ \beta }[/math], so they are dimensionless. Don't get confused between these values and the final values for the free energies of each state.

f_k =

[ 0. 4.60854858 8.63821596 10.52813716 12.39909138

13.90581448 14.60066295 12.653474 7.08947627]

Determining dimensionless free energies by Newton-Raphson iteration.

self consistent iteration gradient norm is 274.37, Newton-Raphson gradient norm is 0.001627

Choosing self-consistent iteration on iteration 0

current f_k for states with samples =

[ 0. 4.60851302 8.63587897 10.52784259 12.39754897

13.90747094 14.60015956 12.65309141 7.08891004]

relative max_delta = 1.600662e-04

self consistent iteration gradient norm is 61.179, Newton-Raphson gradient norm is 1.0307e-05

Choosing self-consistent iteration for lower gradient on iteration 1

current f_k for states with samples =

[ 0. 4.60810077 8.63487764 10.52740478 12.39729092

13.90745661 14.60018318 12.65278436 7.08849961]

relative max_delta = 6.858353e-05

self consistent iteration gradient norm is 15.488, Newton-Raphson gradient norm is 4.3297e-07

Newton-Raphson used on iteration 2

And it goes on like that for a while. It switched to Newton-Raphson once it appears to be converging. This might take 1 step, it might take 5-10 steps. Once it switches to Newton-Raphson, it will go very quickly.

Soon (should just be a few seconds!) we get:

Converged to tolerance of 3.175483e-14 in 5 iterations.

Of 5 iterations, 3 were Newton-Raphson iterations and 2 were self-consistent iterations

Recomputing all free energies and log weights for storage

Final dimensionless free energies

f_k =

[ 0. 4.60763423 8.63398184 10.52688519 12.39691766

13.9073167 14.6002695 12.65255384 7.08801044]

MBAR initialization complete.

Now let's get to the the results:

First, it prints "Deltaf_ij". These are the values of [math]\displaystyle{ \beta\Delta G_{ij} }[/math]. It's a 9x9 matrix, because there are 9 states. The diagonal is zero, because the free energy difference between a state and itself is zero.

Deltaf_ij:

[[ 0. 4.60763423 8.63398184 10.52688519 12.39691766

13.9073167 14.6002695 12.65255384 7.08801044]

[ -4.60763423 0. 4.02634761 5.91925096 7.78928344

9.29968247 9.99263527 8.04491961 2.48037622]

Next, "dDeltaf_ij" the uncertainties in Deltaf_ij

dDeltaf_ij:

[[ 0. 0.00647308 0.01354042 0.01840233 0.0185153 0.01912802

0.02082696 0.02560051 0.0281657 ]

[ 0.00647308 0. 0.00916897 0.01537218 0.01550672 0.01623309

0.0182042 0.02351631 0.02628569]

Finally, the full the results. Six different methods to compare! The rows only run from 0 to 7, because there are only 8 free energy DIFFERENCES -- 0 to 1, 1 to 2, etc.

TI (kJ/mol) TI-CUBIC (kJ/mol) DEXP (kJ/mol) IEXP (kJ/mol) BAR (kJ/mol) MBAR (kJ/mol)

0: 11.546 +- 0.016 11.516 +- 0.017 11.475 +- 0.031 11.588 +- 0.041 11.498 +- 0.017 11.496 +- 0.016

1: 10.326 +- 0.023 10.071 +- 0.026 10.085 +- 0.057 10.009 +- 0.088 10.054 +- 0.024 10.046 +- 0.023

2: 5.416 +- 0.027 4.706 +- 0.040 4.688 +- 0.106 4.961 +- 0.296 4.715 +- 0.027 4.723 +- 0.022

3: 4.716 +- 0.015 4.751 +- 0.015 4.922 +- 0.049 4.665 +- 0.011 4.668 +- 0.010 4.666 +- 0.007

4: 3.679 +- 0.012 3.686 +- 0.013 3.811 +- 0.029 3.746 +- 0.013 3.759 +- 0.011 3.768 +- 0.009

5: 1.419 +- 0.017 1.965 +- 0.020 1.604 +- 0.170 1.759 +- 0.022 1.734 +- 0.016 1.729 +- 0.015

6: -5.418 +- 0.023 -5.320 +- 0.026 -5.115 +- 0.207 -4.947 +- 0.062 -4.858 +- 0.031 -4.859 +- 0.031

7: -13.178 +- 0.021 -13.654 +- 0.025 -13.911 +- 0.048 -13.676 +- 0.169 -13.882 +- 0.020 -13.883 +- 0.020

------------------- ------------------- ------------------- ------------------- ------------------- -------------------

TOTAL: 18.505 +- 0.186 17.721 +- 0.198 17.559 +- 0.305 18.104 +- 0.361 17.688 +- 0.059 17.684 +- 0.070

Things to note: with 9 states, TI is not so good; it's about 1 kJ/mol away from the other values. If we use cubic interpolation to integrate, we improve the agreement with the other algorithms.

Exponential averaging in both directions, insertion and deletion (EXP and DEXP) are not that far off for this example, but the uncertainties are fairly large.

The values for BAR and MBAR are very close! But the uncertainty estimate of BAR is lower. it SEEMS like BAR is better, but that is actually because the BAR estimate is too low. If you ran the experiment lots of times, and computed the sample error you would get a result which is closer to 0.07 than 0.059. This is a known problem with BAR. The estimate of the pairwise differences (0-1, 1-2, 2-3) are all accurate, but the SUM is inaccurate, because the 0->1 and 1->2 calculations both share the same data set, data set 1.

MBAR is generally the lowest variance estimate for a given amount of sampling. In some special cases, TI can get lower, and is frequently almost as good IF enough intermediate states are included. BAR can be almost as good as MBAR, but the uncertainty estimate for the full calculation can frequently be too low.

These are very accurate calculations (in the case of MBAR). Note that they are correct to within 0.07 kJ/mol, which is 0.016 kcal/mol. This free energy can probably only be computed to within 0.05 kcal/mol experimentally.

Hamilton replica exchange

There is no point in doing expanded ensemble with Hamiltonian replica exchange -- it's ALREADY visiting all of the states.